Artificial Intelligence (AI) is reshaping industries and creating new opportunities across the globe. As AI continues to develop, it becomes increasingly reliant on large datasets for training machine learning models. However, acquiring and maintaining these datasets can present significant challenges, especially when they contain sensitive information or reside in distributed locations. This is where Federated Learning comes into play.

Federated Learning (FL) is an innovative approach to machine learning that trains AI models across multiple devices or systems without collecting their raw data in one place. Instead of sending data to a central server for training, Federated Learning keeps data on the devices (like smartphones, IoT devices, or edge nodes) or inside each organization’s own systems, and sends only model updates back to a central server. This allows organizations to train models collaboratively while greatly reducing the privacy and security risks of sharing data.

In this blog, we will explore the concept of Federated Learning, how it works, its benefits, challenges, and how organizations can implement Federated Learning for collaborative AI training.

What is Federated Learning?

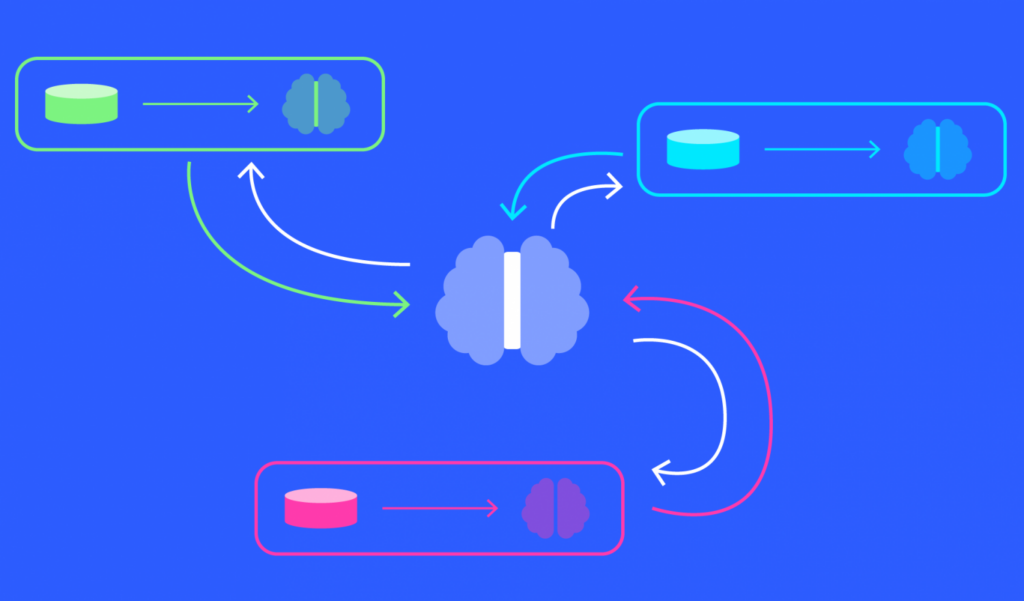

Federated Learning is a distributed machine learning technique that enables multiple participants to collaboratively train an AI model without sharing their local data. Instead of pooling data into a central location, the model is trained locally on each device or system, and only model updates (such as updated weights or gradients) are sent back to a central server. The server then aggregates these updates and applies them to the global model.

This decentralized approach to training ensures that the raw data never leaves the individual devices or systems, preserving privacy and minimizing the risks associated with data sharing.

How Federated Learning Works

Federated Learning works through several key steps that involve multiple participants (also known as clients), a central server, and an AI model:

1. Model Initialization

The process begins with a global model that is initialized and distributed to participating devices or systems (clients). This model could be a neural network, decision tree, or any other machine learning model that needs to be trained.

2. Local Training

Each client trains the model locally using its own data. The data is never transmitted to the central server, so the raw data stays under the client’s control. Typically, each client starts from the current global weights and runs a few epochs of stochastic gradient descent (or another optimizer) to minimize a loss function on its local dataset.
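As a minimal sketch of the local training step, the function below runs per-example stochastic gradient descent for a small linear regression model on one client’s data. The model choice, the function name `local_train`, and the hyperparameters are illustrative assumptions, not part of any particular framework; real systems would use their framework’s own training loop.

```python
from typing import List, Tuple

# A client's local dataset: a list of (features, target) pairs.
Dataset = List[Tuple[List[float], float]]


def local_train(global_weights: List[float], data: Dataset,
                lr: float = 0.05, epochs: int = 5) -> List[float]:
    """Train a copy of the global linear model on this client's data only.

    The model is y_hat = w[0] + w[1]*x[0] + ... + w[d]*x[d-1], where w[0]
    is the bias. Training is plain per-example SGD on squared error.
    """
    w = list(global_weights)  # copy, so the received global model is not modified
    for _ in range(epochs):
        for x, y in data:
            pred = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
            err = pred - y  # derivative of 0.5 * (pred - y)**2 w.r.t. pred
            w[0] -= lr * err
            for j, xj in enumerate(x):
                w[j + 1] -= lr * err * xj
    return w
```

For example, a client holding points from y = 2x + 1 would, given enough epochs, move the weights toward `[1.0, 2.0]` (bias, slope). Only the returned list, never `data`, would leave the device.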

3. Model Updates

After local training, clients send their model updates back to the central server: either the updated weights themselves or the change relative to the global weights (in some setups, gradients). The data itself is never shared, only the updates to the model.
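To make the "updates, not data" idea concrete, the sketch below assumes clients send the change relative to the global weights and encode it as little-endian 32-bit floats with Python’s standard `struct` module. The helper names and the encoding are hypothetical choices for illustration; frameworks use their own serialization formats.

```python
import struct
from typing import List


def compute_update(global_weights: List[float], local_weights: List[float]) -> List[float]:
    """Client side: the update is the element-wise change local - global."""
    return [lw - gw for lw, gw in zip(local_weights, global_weights)]


def encode_update(update: List[float]) -> bytes:
    """Pack the update as little-endian float32 values (4 bytes each)."""
    return struct.pack(f"<{len(update)}f", *update)


def decode_update(payload: bytes) -> List[float]:
    """Server side: unpack the float32 values back into a list."""
    count = len(payload) // 4
    return list(struct.unpack(f"<{count}f", payload))


def apply_update(global_weights: List[float], update: List[float]) -> List[float]:
    """Server side: reconstruct the client's model as global + update."""
    return [gw + d for gw, d in zip(global_weights, update)]
```

The payload is 4 bytes per parameter no matter how many training examples the client holds, which is why the update, unlike the dataset, has a fixed size. Float32 encoding rounds values, so decoded updates may differ slightly from the originals.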

4. Aggregation

The central server aggregates the updates from the participating clients into a new global model. In the widely used Federated Averaging (FedAvg) algorithm, this is a weighted average in which each client’s weights count in proportion to the number of training examples it holds. By combining updates trained on different local datasets, the global model can benefit from data it never sees directly, which can improve its accuracy and generalization.
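The weighted average used by FedAvg can be written as w = Σ_k (n_k / n) · w_k, where w_k are client k’s weights after local training, n_k is its number of training examples, and n = Σ_k n_k over the clients in the round. A minimal pure-Python sketch, assuming each client reports a `(weights, n_k)` pair (a hypothetical message format), is:

```python
from typing import List, Tuple


def federated_average(client_results: List[Tuple[List[float], int]]) -> List[float]:
    """Weighted average of client weights, each weighted by n_k / n."""
    total = sum(n for _, n in client_results)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(client_results[0][0])
    avg = [0.0] * dim
    for weights, n in client_results:
        share = n / total  # this client's fraction of all examples in the round
        for j in range(dim):
            avg[j] += share * weights[j]
    return avg
```

Weighting by n_k keeps a client with only a handful of examples from having the same influence as one with thousands; an unweighted mean is simpler but gives small clients more influence than their share of the data.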

5. Iteration

The updated global model is then sent back to the clients, who continue training the model on their own data. This process repeats for multiple iterations until the model reaches an acceptable level of accuracy.
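The five steps above can be tied together in a short simulation. To keep it small, this sketch assumes a toy one-parameter model that estimates the mean of the data under squared loss; `run_rounds`, `local_steps`, and all hyperparameters are hypothetical names and values chosen for illustration.

```python
from typing import Dict, List


def local_steps(w: float, data: List[float], lr: float, steps: int) -> float:
    """Local training: gradient descent on the loss 0.5 * mean((w - x)**2)."""
    for _ in range(steps):
        grad = sum(w - x for x in data) / len(data)
        w -= lr * grad
    return w


def run_rounds(clients: Dict[str, List[float]], rounds: int = 50,
               lr: float = 0.1, steps_per_round: int = 5) -> float:
    """Simulate federated training; only local_steps reads a client's data."""
    w_global = 0.0                                   # 1. initialize the global model
    total = sum(len(d) for d in clients.values())
    for _ in range(rounds):
        # 2-3. each client trains from the current global model, reports (w_k, n_k)
        results = [(local_steps(w_global, d, lr, steps_per_round), len(d))
                   for d in clients.values()]
        # 4. aggregate with a FedAvg-style weighted average
        w_global = sum(w_k * n_k / total for w_k, n_k in results)
        # 5. the next iteration sends the new global model back to the clients
    return w_global
```

With clients `{"a": [1.0, 2.0, 3.0], "b": [10.0]}`, the global parameter converges toward 4.0, the mean of all four values, even though no party computes it from pooled data. This exact agreement with centralized training is a property of this toy model; with more complex models and heterogeneous data, multiple local steps can make clients drift apart, and FedAvg only approximates the centralized result.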

Benefits of Federated Learning

Federated Learning offers a range of benefits, especially in areas where privacy, data security, and collaboration are essential.

1. Data Privacy and Security

Federated Learning’s most prominent advantage is that it keeps sensitive data on the local device or within the owning organization, avoiding the need to send raw data to a central server. This greatly reduces the exposure of personal information, such as medical records, financial data, or user behavior data. As a result, Federated Learning can help organizations meet data privacy regulations like GDPR and HIPAA, although it does not guarantee compliance on its own.

2. Reduced Data Transfer Costs

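In traditional machine learning, transferring large datasets to a central server for training can incur significant bandwidth and storage costs. With Federated Learning, only model updates are transmitted. Each update is the size of the model rather than the dataset, so for large datasets this can substantially reduce data transfer costs. However, updates are sent every round, so the cumulative traffic for a large model trained over many rounds can still be significant.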
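Whether Federated Learning actually saves bandwidth depends on model size, number of rounds, and dataset size. The back-of-the-envelope sketch below compares a client’s cumulative upload traffic with the size of its raw dataset; every number in it is a hypothetical assumption chosen for illustration, not a measurement.

```python
def upload_bytes(num_params: int, rounds: int, bytes_per_param: int = 4) -> int:
    """Total bytes one client uploads: one full float32 update per round."""
    return num_params * bytes_per_param * rounds


def saves_bandwidth(num_params: int, rounds: int, dataset_bytes: int) -> bool:
    """True if sending updates costs less than uploading the raw dataset once."""
    return upload_bytes(num_params, rounds) < dataset_bytes


# Hypothetical client: 10,000 images of about 150 KB each (~1.5 GB of raw data).
DATASET_BYTES = 10_000 * 150_000

# Small model: 1M parameters x 4 bytes x 100 rounds = 400 MB uploaded.
print(saves_bandwidth(1_000_000, 100, DATASET_BYTES))    # True
# Large model: 100M parameters x 4 bytes x 100 rounds = 40 GB uploaded.
print(saves_bandwidth(100_000_000, 100, DATASET_BYTES))  # False
```

Downloading the global model each round adds a similar amount of traffic, and update compression can shrink both directions.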

3. Collaborative Learning Across Organizations

Federated Learning enables multiple organizations or entities to collaboratively train AI models without sharing sensitive data. For instance, several healthcare institutions can work together to develop a more accurate predictive model for patient outcomes, all while maintaining the confidentiality of patient data.

4. Scalability

Federated Learning is highly scalable because it can support many clients (devices, systems, or organizations) training the model in parallel. Since the data remains localized, the model can learn from diverse and geographically distributed data sources, improving its performance and robustness.

5. Improved Generalization

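Training a model on diverse datasets from different devices, geographies, or organizations can help it generalize better. The model is exposed to a wider range of data distributions than any single participant could provide, which can reduce overfitting to one participant’s data and help it handle real-world variability. The same diversity also makes training harder, as described under Data Heterogeneity below.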

Challenges of Federated Learning

Despite its many benefits, Federated Learning also presents several challenges that organizations must address when implementing it.

1. Data Heterogeneity

In Federated Learning, clients may have very different datasets. For example, one client’s data might represent urban traffic patterns, while another’s might represent rural patterns. This heterogeneity (often called non-IID data) causes problems during aggregation: clients’ local updates may pull the model in conflicting directions, which can slow convergence or reduce accuracy for some clients. Aggregation and local-training techniques designed for heterogeneous data are needed so the model can learn effectively from diverse sources.
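One published technique aimed at heterogeneous data is FedProx, which adds a proximal term (mu / 2) · ||w - w_global||² to each client’s local objective so that local training cannot stray too far from the current global model. The sketch below applies that idea to a toy one-parameter model (estimating a mean under squared loss); the function name, the values of `mu`, and the toy model are assumptions for illustration only.

```python
from typing import List


def fedprox_local(w_global: float, data: List[float], mu: float,
                  lr: float = 0.1, steps: int = 200) -> float:
    """Gradient descent on 0.5*mean((w - x)**2) + (mu/2)*(w - w_global)**2."""
    w = w_global
    for _ in range(steps):
        data_grad = sum(w - x for x in data) / len(data)  # pull toward local data
        prox_grad = mu * (w - w_global)                     # pull toward global model
        w -= lr * (data_grad + prox_grad)
    return w


# A client whose data (mean 10) differs sharply from the global model (0.0):
print(round(fedprox_local(0.0, [9.0, 11.0], mu=0.0), 3))  # 10.0: plain local training
print(round(fedprox_local(0.0, [9.0, 11.0], mu=1.0), 3))  # 5.0: stays closer to global
```

Larger `mu` values keep local models closer to the global one, which can stabilize training on non-IID data at the cost of fitting each client’s data less closely.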

2. Communication Overhead

While Federated Learning reduces the need to transfer raw data, sending model updates frequently still requires significant communication bandwidth. The central server must aggregate updates from many clients, which can be computationally intensive and time-consuming, especially when there are large numbers of participants.
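A common way to reduce this overhead is to compress updates before sending them. The sketch below shows top-k sparsification, one such method: the client transmits only the k entries with the largest magnitude as (index, value) pairs, and the server fills the rest with zeros. The function names and the choice of k are illustrative assumptions.

```python
from typing import List, Tuple


def top_k_sparsify(update: List[float], k: int) -> Tuple[List[int], List[float]]:
    """Keep the k largest-magnitude entries; return their indices and values."""
    ranked = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    indices = sorted(ranked[:k])  # sort indices for a tidy payload
    return indices, [update[i] for i in indices]


def densify(indices: List[int], values: List[float], dim: int) -> List[float]:
    """Server side: rebuild a full-length update, zero where entries were dropped."""
    dense = [0.0] * dim
    for i, v in zip(indices, values):
        dense[i] = v
    return dense


idx, vals = top_k_sparsify([0.1, -3.0, 0.5, 2.0], k=2)
print(idx, vals)                  # [1, 3] [-3.0, 2.0]
print(densify(idx, vals, dim=4))  # [0.0, -3.0, 0.0, 2.0]
```

Dropping entries loses information, so practical schemes often keep the discarded residual on the client and add it to the next round’s update (error feedback); quantizing values to fewer bits is another common option.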

3. Model Synchronization

Synchronizing models across multiple clients and a central server can be difficult. Since clients may have different computational capacities and network conditions, there might be a delay in receiving updates from all participants. Additionally, the frequency of updates can affect the model’s performance and efficiency.
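One common way to cope with uneven clients in synchronous training is to sample a fraction of clients each round and aggregate only the updates that arrive before a deadline, treating late clients as stragglers for that round. A minimal sketch, in which the latencies, the deadline, and the helper names are all hypothetical:

```python
import random
from typing import Dict, List


def sample_clients(client_ids: List[str], fraction: float,
                   rng: random.Random) -> List[str]:
    """Pick a random subset (at least one client) to participate this round."""
    k = max(1, int(len(client_ids) * fraction))
    return rng.sample(client_ids, k)


def on_time(selected: List[str], latency_s: Dict[str, float],
            deadline_s: float) -> List[str]:
    """Keep only clients whose update arrives within the round deadline."""
    return [c for c in selected if latency_s[c] <= deadline_s]


rng = random.Random(42)  # fixed seed so the round is reproducible
clients = ["a", "b", "c", "d", "e"]
latency = {"a": 1.2, "b": 9.5, "c": 0.8, "d": 3.1, "e": 15.0}  # seconds, made up
chosen = sample_clients(clients, fraction=0.6, rng=rng)
print(chosen, on_time(chosen, latency, deadline_s=5.0))
```

Asynchronous variants, in which the server applies updates as they arrive instead of waiting for a round to close, are an alternative, at the cost of mixing updates computed from different versions of the global model.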

4. Security and Privacy Concerns

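Although Federated Learning keeps raw data on the clients by design, it is not automatically secure. Model updates can still leak information about the underlying training data, for example through gradient inversion or membership inference attacks. In addition, malicious clients can submit manipulated updates to degrade the model or implant hidden behavior, a type of attack known as model poisoning. Secure aggregation protocols and differential privacy help limit what the server or other parties can learn from individual updates, while robust aggregation and anomaly detection help mitigate poisoning.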
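As one example of robust aggregation, the sketch below replaces the mean with a coordinate-wise median, which a single extreme update cannot drag arbitrarily far. It is a simplified illustration with made-up update values, not a complete defense: the coordinate-wise median still assumes fewer than half the clients are malicious, and it requires the server to see individual updates, which conflicts with standard secure aggregation that reveals only the aggregate.

```python
from statistics import mean, median
from typing import List


def coordinate_median(updates: List[List[float]]) -> List[float]:
    """Aggregate by taking the median of each parameter across clients."""
    return [median([u[j] for u in updates]) for j in range(len(updates[0]))]


def coordinate_mean(updates: List[List[float]]) -> List[float]:
    """Plain averaging, for comparison."""
    return [mean([u[j] for u in updates]) for j in range(len(updates[0]))]


honest = [[1.0, 1.0], [1.1, 0.9], [0.9, 1.1]]
poisoned = honest + [[100.0, -100.0]]  # one malicious client

print(coordinate_mean(poisoned))    # pulled far off by the malicious update
print(coordinate_median(poisoned))  # stays near the honest updates (about [1.05, 0.95])
```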

5. Limited Model Updates

Clients may not always have enough computational resources to update the model frequently or in an optimal manner. Clients with limited processing power or unreliable internet connections may have difficulty participating in the training process effectively. This can lead to inconsistent model updates and delays in convergence.

Implementing Federated Learning: Key Steps

Implementing Federated Learning within an organization or across multiple partners requires careful planning and execution. Below are the key steps to successfully implement Federated Learning for collaborative AI training:

1. Define the Problem and Objectives

The first step in implementing Federated Learning is to define the specific problem that the AI model is intended to solve. This includes identifying the task (e.g., classification, prediction), the dataset (e.g., text, images, sensor data), and the desired outcomes (e.g., accuracy, speed).

2. Select the Right Model

Choose a machine learning model that is appropriate for the task and can be efficiently trained using Federated Learning. Neural networks are commonly used, but simpler models like decision trees or gradient boosting machines might be more suitable in certain cases.

3. Choose a Federated Learning Framework

Several frameworks have been developed to facilitate Federated Learning. Some of the popular frameworks include:

- TensorFlow Federated (TFF): An open-source framework developed by Google for Federated Learning using TensorFlow.

- PySyft: An open-source library from OpenMined for privacy-preserving machine learning, including Federated Learning.

- Federated AI Technology Enabler (FATE): An open-source framework initiated by WeBank for building industrial-grade Federated Learning systems, with applications in areas such as finance.

4. Prepare Data and Devices

Make sure that each participant’s local data is prepared consistently (for example, the same features, label definitions, and preprocessing steps) and that adequate privacy measures are in place. This might involve setting up edge devices, smartphones, IoT devices, or servers at partner organizations to participate in the training process. Participating devices must also have the computational resources needed to train models locally.

5. Train and Aggregate Models

Begin the training process by sending the initial model to the clients. Clients will train the model using their local data and send the model updates back to the central server. The central server will aggregate these updates, typically using techniques like Federated Averaging, and update the global model accordingly.

6. Monitor and Evaluate the Model

Track the progress of Federated Learning by evaluating the model’s performance at regular intervals. Ensure that the model is converging towards a solution and that privacy and security are maintained throughout the process.
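Because test data also stays on the clients, evaluation is often federated too: each client reports counts on its local test set and the server combines them. The sketch below assumes each client sends a hypothetical `(num_correct, num_examples)` pair; it reports both the pooled accuracy and the worst per-client accuracy, since a good average can hide clients for whom the model performs poorly.

```python
from typing import Dict, Tuple


def federated_accuracy(reports: Dict[str, Tuple[int, int]]) -> Tuple[float, float]:
    """Return (pooled accuracy over all examples, lowest per-client accuracy)."""
    correct = sum(c for c, _ in reports.values())
    total = sum(n for _, n in reports.values())
    if total == 0:
        raise ValueError("no evaluation examples reported")
    per_client = [c / n for c, n in reports.values() if n > 0]
    return correct / total, min(per_client)


# Hypothetical participants and counts, for illustration only.
pooled, worst = federated_accuracy({"hospital_a": (90, 100), "hospital_b": (10, 50)})
print(round(pooled, 3), worst)  # 0.667 0.2
```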

Conclusion

Federated Learning represents a significant step forward in enabling collaborative AI model training while preserving data privacy and security. By allowing organizations to collaborate on training AI models without the need to share raw data, Federated Learning opens up new opportunities in areas such as healthcare, finance, and autonomous systems. However, implementing Federated Learning requires addressing challenges like data heterogeneity, communication overhead, and security concerns.

With careful planning, the adoption of appropriate frameworks, and robust privacy measures, Federated Learning can transform the way AI models are trained, making them more scalable, secure, and collaborative.